Increasing CVM RAM

A couple of weeks back we expanded a cluster, and the expansion completed without any issues. However, the first block, containing four nodes, had been configured with 20 GB of CVM RAM during cluster formation.

The new CVM was configured with the support-recommended value of 32 GB of CVM RAM, so after the expansion the CVMs in the cluster had different RAM sizes. To bring all the CVMs to the same size, we performed the steps below.
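To make the mismatch concrete, here is a small Python sketch that flags the CVMs whose RAM is below the target. It is only an illustration: the inventory (names `cvm-a` to `cvm-e` and their sizes) is hypothetical and hard-coded to mirror this cluster, with four CVMs at 20 GB and one at 32 GB. In practice you would read the values from Prism yourself.

```python
from collections import Counter

# Hypothetical inventory: CVM name -> configured CVM RAM in GB.
# Hard-coded to mirror the situation described above, not read from a cluster.
CVM_RAM_GB = {
    "cvm-a": 20,
    "cvm-b": 20,
    "cvm-c": 20,
    "cvm-d": 20,
    "cvm-e": 32,
}

TARGET_RAM_GB = 32  # support-recommended value mentioned in the text


def cvms_needing_upgrade(inventory: dict[str, int], target_gb: int) -> list[str]:
    """Return the CVMs whose configured RAM is below the target, sorted by name."""
    return sorted(name for name, gb in inventory.items() if gb < target_gb)


def is_uniform(inventory: dict[str, int]) -> bool:
    """True when every CVM in the inventory has the same RAM size."""
    return len(set(inventory.values())) <= 1


if __name__ == "__main__":
    print("RAM sizes in cluster:", dict(Counter(CVM_RAM_GB.values())))
    print("Uniform:", is_uniform(CVM_RAM_GB))
    print("Needs upgrade:", cvms_needing_upgrade(CVM_RAM_GB, TARGET_RAM_GB))
```

Running the same check again after the change is a quick way to confirm that every CVM ended up at the target size.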

Check cluster health

The first step was to make sure there were no unknown issues in the cluster by running a series of checks.

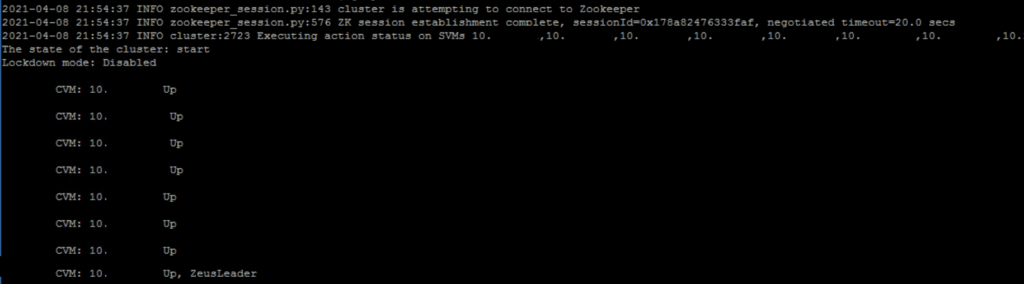

First, let's check that all the CVMs in the cluster are up.

cs | grep -v UP

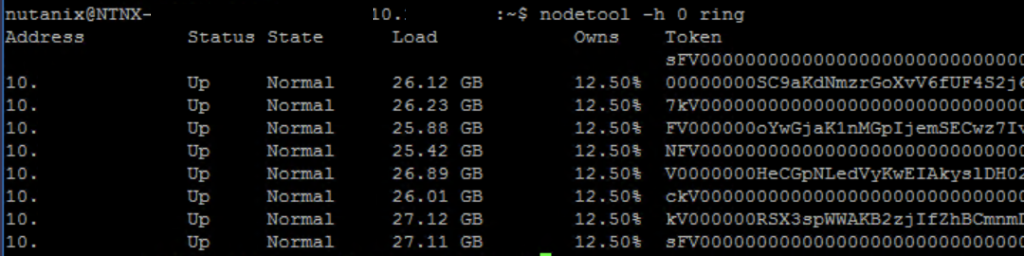

Next, we inspect the metadata ring and confirm that all the CVMs are shown as Normal in the ring.

nodetool -h 0 ring

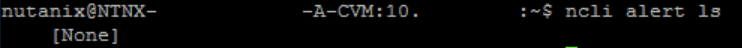
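The ring check boils down to one rule: every node should be both Up and Normal. The Python sketch below expresses that rule over rows that are assumed to have already been parsed from the `nodetool -h 0 ring` output. The `RingNode` type, its fields and the sample addresses are hypothetical, and only the Status and State columns are modelled.

```python
from typing import NamedTuple


class RingNode(NamedTuple):
    # Hypothetical, already-parsed view of one row of `nodetool -h 0 ring`.
    # Only the two columns this check needs are modelled.
    address: str
    status: str  # e.g. "Up" or "Down"
    state: str   # e.g. "Normal", or a transitional state


def unhealthy_ring_nodes(rows: list[RingNode]) -> list[RingNode]:
    """Return every node that is not both Up and Normal in the metadata ring."""
    return [r for r in rows if r.status != "Up" or r.state != "Normal"]


if __name__ == "__main__":
    # Hypothetical sample rows; replace them with what your own cluster reports.
    ring = [
        RingNode("10.0.0.11", "Up", "Normal"),
        RingNode("10.0.0.12", "Up", "Normal"),
        RingNode("10.0.0.13", "Down", "Normal"),
    ]
    bad = unhealthy_ring_nodes(ring)
    if bad:
        for node in bad:
            print(f"{node.address}: status={node.status}, state={node.state}")
    else:
        print("All nodes are Up and Normal in the ring")
```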

Let's check whether there are any active alerts in the cluster.

ncli alert ls

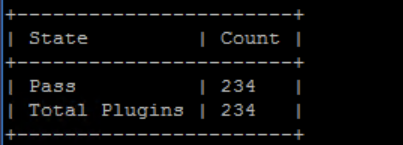

Last but absolutely not least, let's run ncc health_checks run_all to verify the overall status of the cluster.

ncc health_checks run_all
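Taken together, the four checks act as a go/no-go gate before touching the CVM memory. The sketch below is one hypothetical way to record that decision: `PreCheckResults` and `blocking_issues` are illustrative names, the values are filled in by hand after reading each command's output, and treating any active alert or any NCC FAIL as blocking is an assumption you may want to relax after review.

```python
from dataclasses import dataclass


@dataclass
class PreCheckResults:
    # Hypothetical summary of the checks above, filled in after reviewing
    # each command's output; nothing here calls the real CLI tools.
    all_cvms_up: bool        # `cs | grep -v UP` showed nothing unexpected
    ring_all_normal: bool    # every CVM is Normal in `nodetool -h 0 ring`
    active_alert_count: int  # unresolved alerts listed by `ncli alert ls`
    ncc_failures: int        # FAIL results from `ncc health_checks run_all`


def blocking_issues(r: PreCheckResults) -> list[str]:
    """List the reasons, if any, not to start the CVM memory change."""
    issues = []
    if not r.all_cvms_up:
        issues.append("one or more CVMs or services are not UP")
    if not r.ring_all_normal:
        issues.append("metadata ring is not fully Normal")
    if r.active_alert_count > 0:
        issues.append(f"{r.active_alert_count} active alert(s) to review")
    if r.ncc_failures > 0:
        issues.append(f"{r.ncc_failures} NCC check(s) failed")
    return issues


if __name__ == "__main__":
    results = PreCheckResults(all_cvms_up=True, ring_all_normal=True,
                              active_alert_count=0, ncc_failures=0)
    problems = blocking_issues(results)
    print("GO" if not problems else "NO-GO: " + "; ".join(problems))
```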

Now that we have determined the cluster is healthy, we can continue with the CVM memory increase.

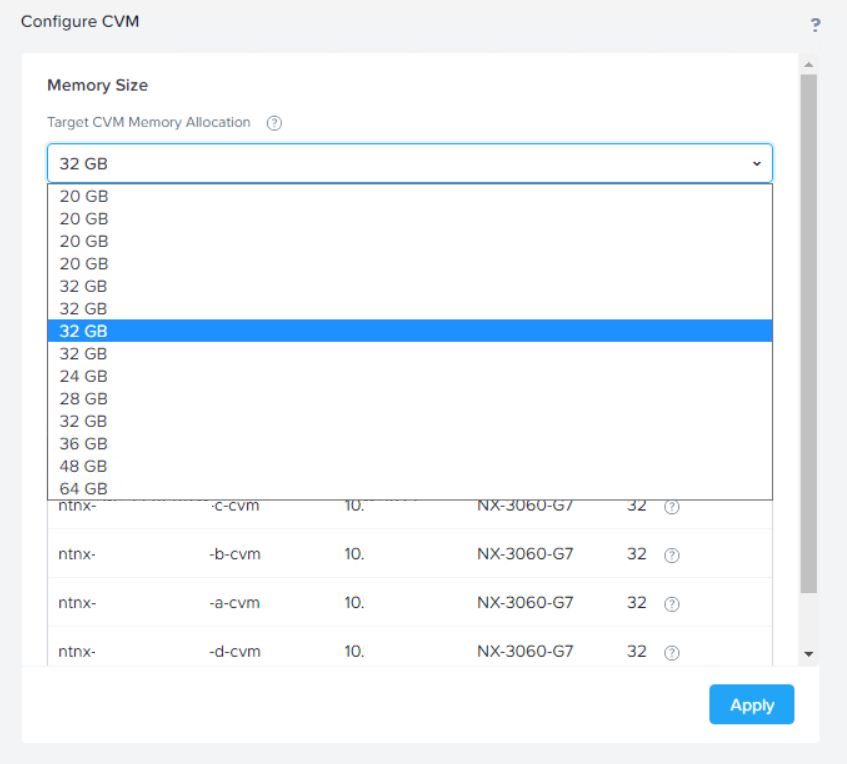

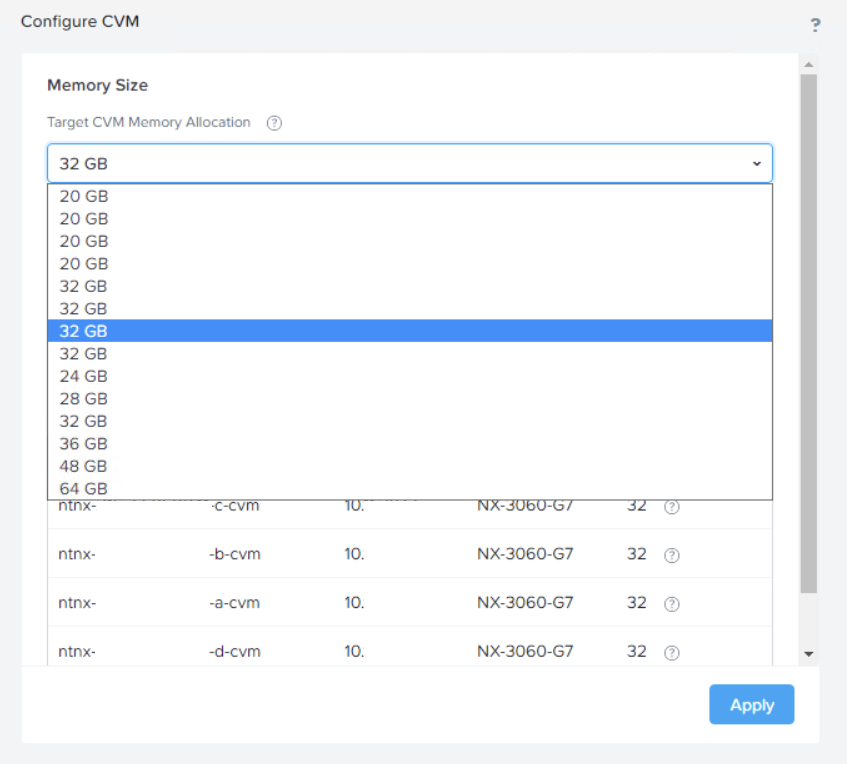

Next, open the Prism Element interface of the affected cluster, go to Settings -> Configure CVM, and choose the new amount of CVM RAM from the drop-down list.

We then monitored the rolling memory upgrade and reboot of the CVMs. During the rolling reboot you may see alerts about CVM reboots or about the resiliency status on the dashboard; this is expected.

While a CVM is rebooting, another CVM takes over its tasks, such as serving I/O, so the operation can be performed during production. The user VMs are NOT affected by the CVM reboots and remain on the same physical host throughout the process without disruption.
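The change is safe during production because the CVMs are rebooted one at a time. The toy simulation below models only that ordering rule: it takes each CVM offline in turn, asserts that no more than one is down at once, and brings it back before moving on. The function name, the log format and the choice of peer CVM (simply the first one that is up) are hypothetical simplifications, not a description of how Prism actually schedules the upgrade.

```python
def rolling_reboot(cvms: list[str], max_down: int = 1) -> list[str]:
    """Simulate a one-at-a-time rolling reboot and return an event log.

    At every step the simulation checks that no more than `max_down`
    CVMs are offline at the same time.
    """
    if len(cvms) < 2:
        raise ValueError("need at least two CVMs so a peer can serve I/O")
    up = {name: True for name in cvms}
    log = []
    for name in cvms:
        up[name] = False  # CVM goes down to apply the new memory size
        down = [n for n, is_up in up.items() if not is_up]
        assert len(down) <= max_down, f"too many CVMs down: {down}"
        # Simplification: pick the first CVM that is up as the peer serving I/O.
        peer = next(n for n, is_up in up.items() if is_up)
        log.append(f"{name} rebooting; I/O served via {peer}")
        up[name] = True  # CVM is back; only now continue with the next one
        log.append(f"{name} back up")
    return log


if __name__ == "__main__":
    for event in rolling_reboot(["cvm-a", "cvm-b", "cvm-c"]):
        print(event)
```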

After the task is completed, make sure the resiliency status returns to normal, and run all the health checks again to confirm the cluster is healthy. Job well done :)

To read more about CVM memory configuration, please see the official documentation on the support portal here: https://portal.nutanix.com/page/documents/details?targetId=Web-Console-Guide-Prism-v5_19%3Awc-cvm-memory-configuration-c.html&a=4fcf059439e02f360825755b32bff0890fe164a495159c55561daefca4e0c18f90832370427202a6